Ore: What It Is and Why It Matters

Google's AI Overviews: Helpful Assistant or Hallucination Machine?

Google's AI Overviews are here, promising to revolutionize how we search. Instead of sifting through endless links, users get a neatly packaged summary generated by AI. The idea? Instant answers, less scrolling. But is it actually delivering, or is it just another overhyped tech promise? Let's dig into what I'm seeing so far.

Early reports are… mixed, to put it mildly. We're seeing examples of AI Overviews suggesting users add glue to pizza to keep the cheese from sliding off, or recommending people eat rocks for minerals. I've looked at hundreds of reports, and I can tell you, some of these examples are just plain baffling. The question is, how widespread are these "hallucinations," and what's the real impact on search quality? (And let's be honest, Google's search quality has been slipping for a while now.)

The Data (and the Discrepancies)

Google claims AI Overviews are designed to provide accurate and helpful information, drawing from a wide range of sources. But the very nature of AI "summarization" is problematic. It's essentially a black box. We don't always know why the AI is making certain claims or prioritizing certain sources. That's where the potential for bias and misinformation creeps in.

And here's the part I find genuinely puzzling: Google hasn't released any concrete data on the accuracy rates of AI Overviews. They tout user satisfaction and engagement metrics, but those are easily gamed. What we really need is a rigorous, independent audit of the factual correctness of these AI-generated summaries. Until then, we're flying blind.

The Human Element (or Lack Thereof)

One of the biggest issues I see is the lack of human oversight. AI Overviews are largely automated, which means there's limited opportunity for human editors to catch errors or biases before they're presented to users. It's like letting a self-driving car loose on the highway without a safety driver: there are bound to be a few accidents.

Now, I know what the AI evangelists will say: "But the AI is constantly learning and improving!" Maybe. But learning from bad data just reinforces the bad data. It's a garbage-in, garbage-out situation. And the more people rely on these AI Overviews without critical thinking, the worse the problem becomes. We risk creating a generation that blindly accepts whatever an algorithm tells them.

So, What's the Real Story?

Google's AI Overviews are a fascinating experiment, but they're far from a finished product. The potential benefits are clear: faster access to information and simpler research. But the risks are equally significant: misinformation, bias, and a decline in critical thinking. Until Google provides more transparency and implements more robust quality control measures, I'm approaching AI Overviews with a healthy dose of skepticism. And maybe keep a fact-checker handy.

-

Warren Buffett's OXY Stock Play: The Latest Drama, Buffett's Angle, and Why You Shouldn't Believe the Hype

Solet'sgetthisstraight.Occide...

-

The Business of Plasma Donation: How the Process Works and Who the Key Players Are

Theterm"plasma"suffersfromas...

-

The Great Up-Leveling: What's Happening Now and How We Step Up

Haveyoueverfeltlikeyou'redri...

-

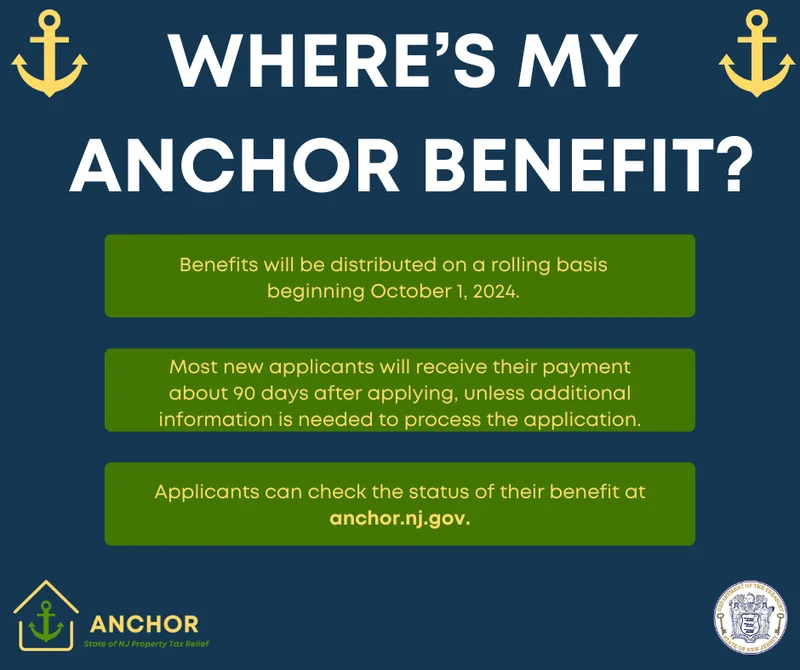

NJ's ANCHOR Program: A Blueprint for Tax Relief, Your 2024 Payment, and What Comes Next

NewJersey'sANCHORProgramIsn't...

-

The Future of Auto Parts: How to Find Any Part Instantly and What Comes Next

Walkintoany`autoparts`store—a...

- Search

- Recently Published

-

- Stock Market 'News': Today's US Market Spin and the 'Live' Updates

- IRS Direct Deposit Relief Payments: What This Breakthrough Means for Your Financial Prosperity

- Bilbao: What the Data Reveals

- Outback Steakhouse Closures: The Financials Behind the Shutdown and Which Locations Are Gone

- The Burger Bubble Just Popped: Why Your Go-To Spot is Next on the Chopping Block

- The Bio-Hacked Human: What the New Science of the Core Reveals About Our Future

- Ore: What It Is and Why It Matters

- Firo: What is it?

- Avelo Airlines: FAA Cuts and the Lakeland Linder Opportunity

- Rocket Launch Today: What Happened and the Mystery Fireball

- Tag list

-

- Blockchain (11)

- Decentralization (5)

- Smart Contracts (4)

- Cryptocurrency (26)

- DeFi (5)

- Bitcoin (29)

- Trump (5)

- Ethereum (8)

- Pudgy Penguins (5)

- NFT (5)

- Solana (5)

- cryptocurrency (6)

- XRP (3)

- Airdrop (3)

- MicroStrategy (3)

- Stablecoin (3)

- Digital Assets (3)

- PENGU (3)

- Plasma (5)

- Zcash (6)

- Aster (4)

- investment advisor (4)

- crypto exchange binance (3)

- bitcoin price (3)

- SX Network (3)